You might already run VMware on VMC and want to expand to GCVE, or vice versa. Running VMware in a hybrid-cloud model provides higher resiliency, helps prevent vendor lock-in, and lets you place workloads based on your needs. This blog presents one design to interconnect VMC and GCVE over VPN connections.

1. Considerations for VMC and GCVE network connections

There are various design options to connect VMC and GCVE. In a separate blog post, I will cover the option of using dedicated private connections. When you are just getting started with a POC, routing traffic between these two VMware environments over VPN across the internet is much faster to set up and cheaper.

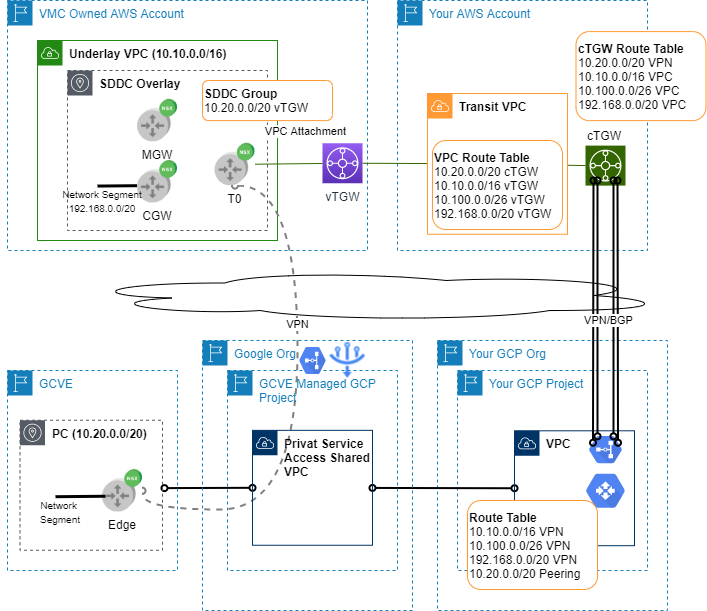

A straightforward design is to interconnect VMC and GCVE using VMware’s own VPN feature. Figure 1 shows this option as a dotted line. There are a few reasons I don’t recommend this design choice.

- IPsec VPN throughput. There’s no public performance data for VMware IPsec VPN. Don’t expect the number to be impressive. Using VMware VPN also puts extra load on the NSX-T edge. In my design, I choose to use the IPsec VPN services from the cloud providers. VPN performance from both providers is very predictable. AWS VPN offers up to 1.25 Gbps per tunnel. If the site-to-site VPN is terminated on a TGW, both tunnels are active, which increases bandwidth to 2.5 Gbps. On top of that, multiple site-to-site VPN connections can be configured as ECMP paths. This not only increases throughput further but also improves network resiliency.

- HCX Support. HCX doesn’t work well with VMware’s own VPN. The limitation is mentioned here.

- Integration with future connection design. Once you decide to go down the dual-vendor path after a successful POC, it’s a good idea to invest in private connections. The cloud VPN solution then works nicely as a backup path. The underlay is completely transparent to NSX-T in this design, so no change is needed on NSX-T.

- Leverage additional services on the native side. There is a good chance you have other native AWS and GCP services. They can share the same cloud VPN paths.
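The throughput arithmetic in the first bullet can be sketched in a few lines of Python. This is only a back-of-the-envelope estimate built from the figures quoted above (1.25 Gbps per tunnel, two active tunnels per connection on a TGW); the function name is my own, and real throughput depends on packet size, flow distribution, and what the far end can sustain.

```python
# Per-tunnel figure quoted in the text for AWS site-to-site VPN.
GBPS_PER_TUNNEL = 1.25
# A site-to-site VPN connection terminated on a TGW has two active tunnels.
TUNNELS_PER_CONNECTION = 2


def aggregate_vpn_gbps(connections: int) -> float:
    """Upper-bound aggregate bandwidth across ECMP VPN connections (hypothetical helper)."""
    if connections < 1:
        raise ValueError("need at least one VPN connection")
    return connections * TUNNELS_PER_CONNECTION * GBPS_PER_TUNNEL


for n in (1, 2, 4):
    print(f"{n} connection(s): up to {aggregate_vpn_gbps(n)} Gbps")
```

Keep in mind that ECMP typically hashes per flow, so a single flow is still likely to be bound by one tunnel. The aggregate number only helps when traffic is spread over many flows.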

2. Cloud VPN as Interconnect Option between VMC and GCVE

Figure 1 illustrates the setup and the high-level design. There are three prefixes on the VMC side: 10.10.0.0/16 is assigned to the SDDC, 192.168.0.0/20 is reserved for regular workload subnets, and a separate subnet, 10.100.0.0/26, is dedicated to HCX. On the GCVE side, 10.20.0.0/20 is reserved, out of which the first /21 is broken down into the various GCVE subnets.
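Before building anything, it is worth sanity-checking an address plan like this one. The sketch below uses Python's standard `ipaddress` module to confirm that the four prefixes do not overlap and to show which /21 is the "first" one inside the GCVE /20. The labels and the `find_overlaps` helper are my own naming, not anything from VMC or GCVE.

```python
import ipaddress
from itertools import combinations

# Prefixes from the design above; the labels are illustrative only.
PREFIXES = {
    "VMC SDDC": ipaddress.ip_network("10.10.0.0/16"),
    "VMC workloads": ipaddress.ip_network("192.168.0.0/20"),
    "VMC HCX": ipaddress.ip_network("10.100.0.0/26"),
    "GCVE": ipaddress.ip_network("10.20.0.0/20"),
}


def find_overlaps(prefixes):
    """Return every pair of labels whose networks overlap."""
    return [
        (name_a, name_b)
        for (name_a, net_a), (name_b, net_b) in combinations(prefixes.items(), 2)
        if net_a.overlaps(net_b)
    ]


overlaps = find_overlaps(PREFIXES)
# The first /21 carved out of the GCVE /20.
gcve_first_21 = next(PREFIXES["GCVE"].subnets(new_prefix=21))

print("overlapping pairs:", overlaps)
print("first GCVE /21:", gcve_first_21)
```

Overlapping prefixes between the two sides would break routing across the VPN, so this is a cheap check to run whenever the plan changes.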

2.1 VMC Related Setup

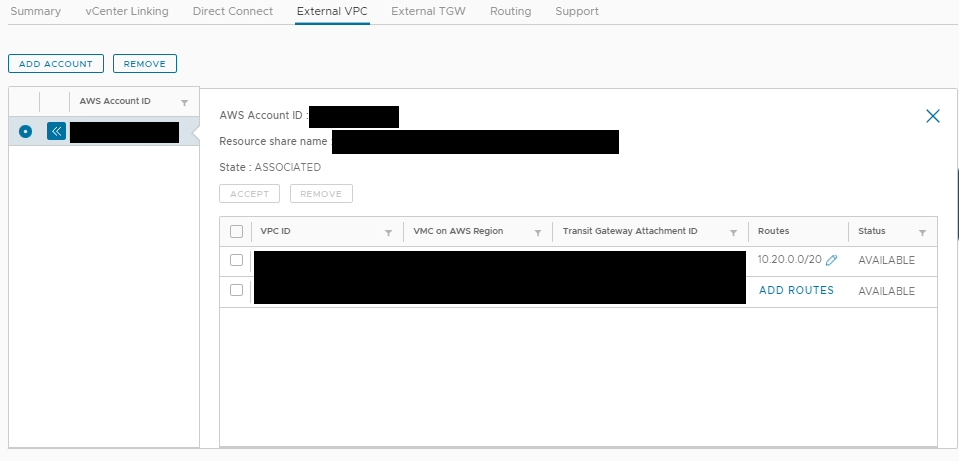

External VPC is a new SDDC Group feature introduced in VMC. It attaches an external VPC to the SDDC Group (vTGW) and allows you to configure static routes pointing to the external VPC. The external VPC is often referred to as a transit VPC. Figure 2 shows the VMC SDDC Group screen where the GCVE prefix 10.20.0.0/20 is configured. Detailed steps can be found in the VMC docs.

2.2 AWS Related Setup

The first task on the AWS side is to configure a site-to-site VPN. The VPN needs to terminate on a TGW. Note that the cTGW is owned by your AWS account. With a TGW, it’s fairly easy to redistribute VMC prefixes into BGP toward GCP. The same is not possible if a VGW is used for the site-to-site VPN.

Once the VPN is up and running, we can configure the route tables. In the VPC route table, four static routes are configured: 10.20.0.0/20 points to the cTGW, and the remaining three VMC prefixes have the vTGW as the next hop. In the cTGW’s route table, 10.20.0.0/20 is learned through BGP from GCP. The three VMC prefixes are statically configured and redistributed into BGP.
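To make the transit VPC route table concrete, here is a small Python model of the four static routes and a longest-prefix-match lookup. It is only an illustration of how a destination address maps to a next hop in this design, not an AWS API call. The `next_hop` function and the sample addresses are assumptions of mine.

```python
import ipaddress

# The four static routes in the transit VPC route table, as described above.
VPC_ROUTES = [
    ("10.20.0.0/20", "cTGW"),    # GCVE prefix, reached over the VPN
    ("10.10.0.0/16", "vTGW"),    # VMC SDDC
    ("192.168.0.0/20", "vTGW"),  # VMC workload subnets
    ("10.100.0.0/26", "vTGW"),   # VMC HCX
]


def next_hop(destination, routes=VPC_ROUTES):
    """Return the next hop for a destination using longest-prefix match, or None."""
    address = ipaddress.ip_address(destination)
    matches = [
        (ipaddress.ip_network(prefix), hop)
        for prefix, hop in routes
        if address in ipaddress.ip_network(prefix)
    ]
    if not matches:
        return None
    # The most specific (longest) matching prefix wins.
    return max(matches, key=lambda match: match[0].prefixlen)[1]


# Sample destinations are made up for illustration.
for destination in ("10.20.1.10", "192.168.3.4", "10.100.0.5", "8.8.8.8"):
    print(destination, "->", next_hop(destination))
```

Traffic toward GCVE goes to the cTGW and on to the VPN, while traffic toward any of the three VMC prefixes goes to the vTGW. Anything else has no match in this model.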

2.3 GCP Related Setup

On the GCP side, you need to advertise 10.20.0.0/20 as a custom IP range in the VPN BGP sessions. Otherwise, follow the standard GCVE network setup, including peering with the Google PSA VPC.

2.4 GCVE Related Setup

There is not much routing setup to perform on the GCVE side. GCVE should pick up the VMC prefixes automatically. Remember to check the firewall configuration so traffic is allowed or blocked as expected.

2.5 DNS Setup

VMC management FQDNs are in public domains even if you choose to resolve them to private addresses. They can be resolved from GCVE without any special configuration. To resolve GCVE FQDNs, you need to configure conditional forwarding in VMC DNS. Every GCVE private cloud (PC) has its own DNS servers to manage its local domain names.
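Conditional forwarding simply means "send queries for this domain to these servers, and everything else to the default resolvers". The Python sketch below models that decision. The zone name, DNS server addresses, and default resolver are all placeholders I made up; substitute the local domain and DNS servers shown for your own GCVE private cloud.

```python
# Hypothetical forward zone: a GCVE private cloud domain and its DNS servers.
# Replace both with the values from your own private cloud.
FORWARD_ZONES = {
    "gcve.example.internal": ["10.20.0.10", "10.20.0.11"],
}
# Placeholder for whatever resolvers VMC DNS uses by default.
DEFAULT_RESOLVERS = ["192.0.2.53"]


def resolvers_for(fqdn, zones=FORWARD_ZONES, default=DEFAULT_RESOLVERS):
    """Pick the DNS servers for a name: most specific matching zone, else default."""
    name = fqdn.rstrip(".").lower()
    matching = [
        zone for zone in zones
        if name == zone or name.endswith("." + zone)
    ]
    if not matching:
        return default
    # Prefer the longest (most specific) zone suffix.
    return zones[max(matching, key=len)]


print(resolvers_for("vcsa.gcve.example.internal"))
print(resolvers_for("vcenter.sddc.example.com"))
```

With one forward zone per GCVE private cloud, names in that private cloud's local domain go to its own DNS servers, while the public VMC FQDNs keep resolving through the default path.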

2.6 HCX Setup

Follow the standard procedures to establish site pairing and the service mesh. One minor note: HCX Manager has a default HTTP proxy for licensing and upgrades. Since the HCX Manager on the VMC side is on a private IP, site pairing would fail. You need to work with GCVE support to add your HCX Manager IP addresses to the HTTP proxy exception list.

If you have reached this point, you are ready to extend network segments through HCX to the other side. You can also migrate/vMotion your VMs! Your network segments on the VMC side can also reach those on the GCVE side over L3.