VMware Cloud on AWS (VMC) and Google Cloud VMware Engine (GCVE) are two popular managed VMware-as-a-service platforms. Plenty of online comparisons cover price, host specs, storage, and available regions. This blog takes a deeper dive into the network aspects of VMC vs GCVE.

Underlay Network

When it comes to networking, it’s the underlay, rather than the NSX-T overlay, that really differentiates the two platforms. The two solutions take different approaches to integrating with the cloud infrastructure. With VMC, users might feel like they are working with two vendors, VMware and AWS. VMware, in turn, is treated as a regular AWS customer and uses standard AWS network services. For GCVE, Google chose to integrate NSX-T with its internal data center network.

1. AWS network as VMC underlay

In its VMC implementation, VMware tries to abstract the underlay away from users as much as possible. Still, the two are very tightly coupled. A successful VMC deployment requires a solid understanding of AWS network services such as Direct Connect, Transit Gateway (TGW), and VPC. As AWS network services evolve, VMware keeps introducing new network features or has to tweak existing ones.

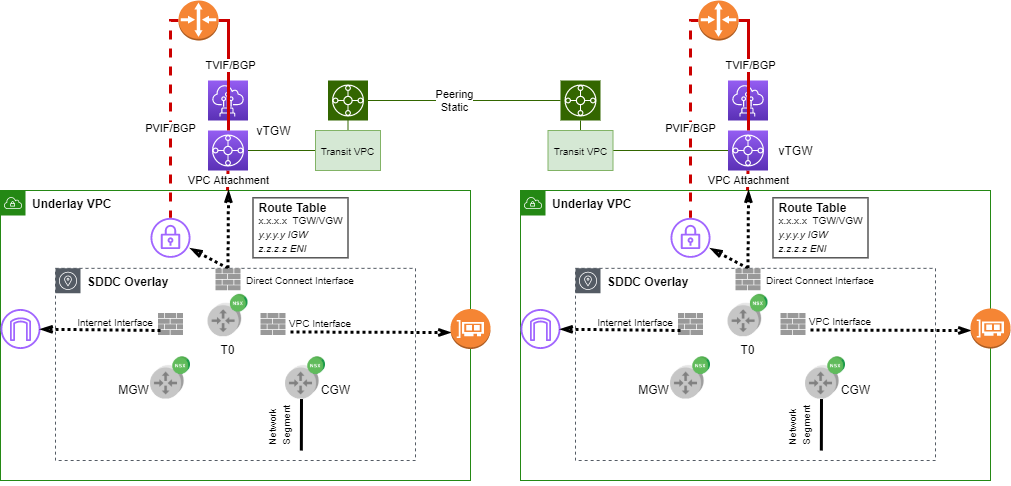

Figure 1 illustrates the main network integration points between VMC and AWS. Some approaches, like a private VIF (PVIF) to a VGW, reflect the early days of AWS Direct Connect. Others, like vTGW and transit VPC, are the latest VMC features. I’m sure that when AWS rolls out new TGW features, peering among SDDCs and native VPCs will look different in VMC.

I have hit a few major pain points when running VNFs on AWS, not to mention a heavyweight like NSX-T. AWS networking might be the real culprit here. There’s a lack of support for traditional routing protocols like BGP. In VMC, the T0 edge is the exit point between NSX-T and the AWS VPC. Based on my AWS knowledge and real-world experience, no dynamic routing protocol runs on any of its external interfaces. Instead, AWS API calls are used for route manipulation. Don’t be surprised if route propagation takes on the order of minutes.

This limitation also makes high-availability design harder, because failover takes longer.
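To make the failover point concrete, here is a minimal sketch of what API-driven route failover looks like in general. It is not VMware's implementation, which is not visible to customers. The function name `fail_over_routes`, the route table IDs, the prefixes, and the ENI ID are all hypothetical. The `ec2` argument is assumed to be any object with a `replace_route` method that accepts the same keyword arguments as the boto3 EC2 client.

```python
def fail_over_routes(ec2, route_table_ids, cidrs, standby_eni):
    """Repoint every (route table, prefix) pair at the standby interface.

    `ec2` is assumed to behave like a boto3 EC2 client. Each pair needs its
    own API request, so the work grows with tables x prefixes.
    Returns the number of API calls issued.
    """
    calls = 0
    for table_id in route_table_ids:
        for cidr in cidrs:
            ec2.replace_route(
                RouteTableId=table_id,
                DestinationCidrBlock=cidr,
                NetworkInterfaceId=standby_eni,
            )
            calls += 1
    return calls
```

With real credentials you would pass something like `boto3.client("ec2")` as `ec2`. The point of the sketch is only that every route change is a separate control-plane request rather than a protocol update, which is one plausible reason convergence feels slow.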

Yet another group of issues comes from the various quotas imposed by AWS. Just looking at Figure 1, the quotas on route tables, TGW, DXGW, and Direct Connect could easily number more than one hundred. I have had a few experiences where VMware failed to account for some of these limits as my environments scaled up. I plan to write another blog on all my fun stories with AWS quotas. There are also hard limits that you need to factor into your design from the very beginning.
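One way to keep quotas in view at design time is a simple headroom check. The sketch below is only an illustration: the quota names and every number in it are hypothetical placeholders, not real AWS limits, and `quota_report` is a made-up helper. Look up the actual quotas for your account and region before relying on anything like this.

```python
def quota_report(planned, limits, warn_ratio=0.8):
    """Classify each quota as 'ok', 'warn', or 'over' for a planned design."""
    report = {}
    for name, limit in limits.items():
        used = planned.get(name, 0)
        if used > limit:
            report[name] = "over"   # design does not fit under the limit
        elif used >= warn_ratio * limit:
            report[name] = "warn"   # fits, but with little room to grow
        else:
            report[name] = "ok"
    return report


# Hypothetical quota names and values, for illustration only.
limits = {"routes_per_table": 50, "tgw_attachments": 20, "dxgw_prefixes": 100}
planned = {"routes_per_table": 62, "tgw_attachments": 17, "dxgw_prefixes": 30}

for name, status in quota_report(planned, limits).items():
    print(f"{name}: {planned[name]}/{limits[name]} -> {status}")
```

Running a check like this against the scaled-up design, not just the day-one design, is what catches the limits that only bite later.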

2. GCP network as GCVE underlay

GCVE’s underlay takes a completely different approach. I cannot go into its implementation details. In a nutshell, it’s more like an NSX-T deployment in an on-prem data center. Both ESXi switches and NSX-T components can attach to traditional VLANs as uplinks. More importantly, traditional protocols can be used pretty much all the way from the NSX-T edge to the on-prem routers. At a high level, standard GCP network services mainly connect GCVE private clouds (PCs) across regions, or connect them to the customer’s on-prem sites and other clouds. In fact, GCVE takes care of most inter-region PC traffic. Customers only need to plug their VPCs into the private services access (PSA) shared VPC in the required regions. Overall, GCP network services are more loosely coupled with NSX-T than AWS network services are in VMC.

Unlike VMC, where the T0 edge is the only exit point, GCVE lets some traffic leave NSX-T without going through the edges. A good example is HCX traffic. In the GCVE console, you can find dedicated VLANs for HCX. It’s also possible to put other types of traffic on such VLANs. If NSX-T edge throughput is a bottleneck in your environment, this feature can be a lifesaver.

Overlay

As mentioned at the beginning of this blog, both platforms run standard NSX-T. Major supported features and implementation patterns are similar. With GCVE, users have full access to NSX-T, so in theory they have more knobs to play with. In reality, too many one-off customizations that depart from the standard patterns make an environment increasingly difficult for GCVE to support. Be mindful of the shared responsibility model in managed services.

Connection to Native side

Both VMC and GCVE offer standard features to connect to the respective native sides. This comes in handy if your services, or your SaaS vendors, run on the same cloud. If you need to connect across cloud boundaries, there are more network and security tasks to plan ahead.

Network visibility

Because VMC uses standard AWS network services for its underlay, those services provide rich sets of telemetry data. Some of that data is visible only to VMware, though, due to account ownership. GCVE definitely needs to ramp up visibility into its NSX-T underlay. When it comes to the overlay, having full access to NSX-T in GCVE helps big time!

Don’t forget about Support

While both platforms continue to improve visibility and network intelligence, there are still plenty of network issues that you will need to work through with their support teams. With VMC, keep in mind there’s one more party in the loop: AWS. Ask to have them engaged whenever possible.